Agentic panic? New study finds AI agents in production face infrastructure and reliability challenges

Research from Cleanlab reveals that the 5% of enterprises running production AI workloads are rebuilding systems every 90 days – and satisfaction is tanking. As software vendors and enterprises embrace agentic strategies, infrastructure churn and poor performance have become major obstacles. Cleanlab CEO Curtis Northcutt explains what we’re up against, and why it matters.

- Cleanlab, a technology platform for building safer AI agents, has published new research

- The 5% of companies running AI in production are rebuilding systems every 90 days

- Satisfaction rates have dropped below 33%

- Infrastructure instability, reliability, and observability are critical weaknesses

- For software vendors, building on unstable foundations is a challenge

- Human feedback is the key to closing the gap

Curtis Northcutt isn’t your typical Silicon Valley CEO. His Midwestern roots are immediately detectable. It’s not his accent that gives it away, but an authentic kindness and hospitality that comes through the moment he starts talking.

“By way of background, I’m from rural Kentucky,” he told me over Zoom from San Francisco, where his startup, Cleanlab, is headquartered. “Mom worked in a call center. Dad is a mailman, actually, and today they still live that way. So that's my actual background, not some story nonsense.”

It was a solitary start, growing up in a place that felt, in his words, lonely and with limited opportunities. Those humble beginnings certainly drove him to succeed, but they also deeply informed his attitudes, instilling a strong moral compass and an affinity for the people of Middle America – not just those in the cosmopolitan coastal epicenters.

“I really care about people in the Midwest,” he said. “I live in a big city. It's where AI is right now. But fundamentally, what I cared about was, how do we use technology and actually help people? And AI had the most promise to do that.”

In the classic small-town-kid-makes-it-big narrative, Curtis landed at MIT, powered by his unequivocal brilliance and tacit motivation. During his PhD candidacy in computer science, he built a cheating detection system for both MIT and Harvard to govern their Massive Open Online Courses (referred to as MOOCs).

Cleanlab CEO and Co-Founder Curtis Northcutt. Source: LinkedIn

It’s a fascinating tale and demonstrates the cunning of ambitious cheaters: A single learner could enroll in a MOOC under multiple accounts, using the extra accounts to “harvest” correct answers and then earn a perfect score and certification on a main account. The strategy, called CAMEO, was quite the hustle, but Curtis and his colleagues used click-stream data – the record of what MOOC learners do while logged in – to uncover the method.

That same innovative thinking has guided Curtis to the highest levels of academic achievement, earning a doctorate and garnering over 1,500 citations. He was also the recipient of MIT’s coveted Morris Joseph Levin Award for his Masterworks Thesis Presentation.

Machine learning became the central focus, and Curtis populated his resume with the likes of Amazon, Google, Oculus, and Microsoft. Across this field of experience, he worked tirelessly on the same core problem: making AI work on messy, real-world data that was piling up in every direction.

“The problem was, we had an AI thing that was OK,” he explained. “But wasn’t actually helping much because it couldn’t work that well on noisy, badly labeled, problematic data.”

Driven by his focus on ML and the emergence of modern AI, Curtis went on to develop what he called Confident Learning in 2019. It is a novel approach for characterizing, identifying, and learning with noisy labels in datasets, based on three principles: pruning noisy data, counting to estimate noise, and ranking examples to train with confidence. The concept was so impactful that it earned the Journal of Artificial Intelligence Research's 5-Year Test-of-Time Award.

We’re getting into pretty heady stuff as it relates to topics like quantifying ontological class overlap, but as Curtis noted in his paper on the topic, the production of cleaner data for training resulted in significant improvements in model accuracy.
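For readers who want a feel for the prune, count, and rank idea, here is a deliberately simplified sketch in plain Python. It is not Cleanlab's library code or API: the function name `find_label_issues_sketch`, the toy labels, and the toy probabilities are all assumptions made for illustration, and the real method includes calibration and estimation steps that are omitted here.

```python
def find_label_issues_sketch(labels, pred_probs):
    """Simplified illustration of prune / count / rank on noisy labels.

    labels: given (possibly wrong) integer class labels, one per example.
    pred_probs: per-example lists of class probabilities from any
        classifier (hypothetical inputs, ideally out-of-sample).
    Returns (suspect indices, confident joint counts).
    """
    num_classes = len(pred_probs[0])

    # Per-class threshold: the model's average confidence in class k
    # over the examples that were labeled k.
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for y, probs in zip(labels, pred_probs):
        sums[y] += probs[y]
        counts[y] += 1
    thresholds = [sums[k] / counts[k] if counts[k] else 1.0
                  for k in range(num_classes)]

    # Count: tally given label vs. confidently predicted label.
    confident_joint = [[0] * num_classes for _ in range(num_classes)]
    suspects = []
    for i, (y, probs) in enumerate(zip(labels, pred_probs)):
        confident = [k for k in range(num_classes) if probs[k] >= thresholds[k]]
        if not confident:
            continue  # the model is not confident about any class here
        guess = max(confident, key=lambda k: probs[k])
        confident_joint[y][guess] += 1
        if guess != y:
            suspects.append(i)  # Prune candidate: label disagrees with model

    # Rank: least confidence in the given label comes first.
    suspects.sort(key=lambda i: pred_probs[i][labels[i]])
    return suspects, confident_joint


# Toy data: examples 2 and 5 carry labels the model strongly disagrees with.
toy_labels = [0, 0, 0, 1, 1, 1]
toy_probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9],
             [0.2, 0.8], [0.3, 0.7], [0.95, 0.05]]
print(find_label_issues_sketch(toy_labels, toy_probs)[0])  # prints [5, 2]
```

The off-diagonal cells of the returned count table are a rough estimate of how often one class gets mislabeled as another, which is the "counting to estimate noise" step in miniature.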

That was the birth of Cleanlab – an open source, data-centric AI package for “cleaning” data and labels by automatically detecting issues in an ML dataset. It tackled the reality of unstructured data, using existing models to estimate problems that can be fixed to train better models and improve reliability across supervised learning, LLM, and RAG apps.

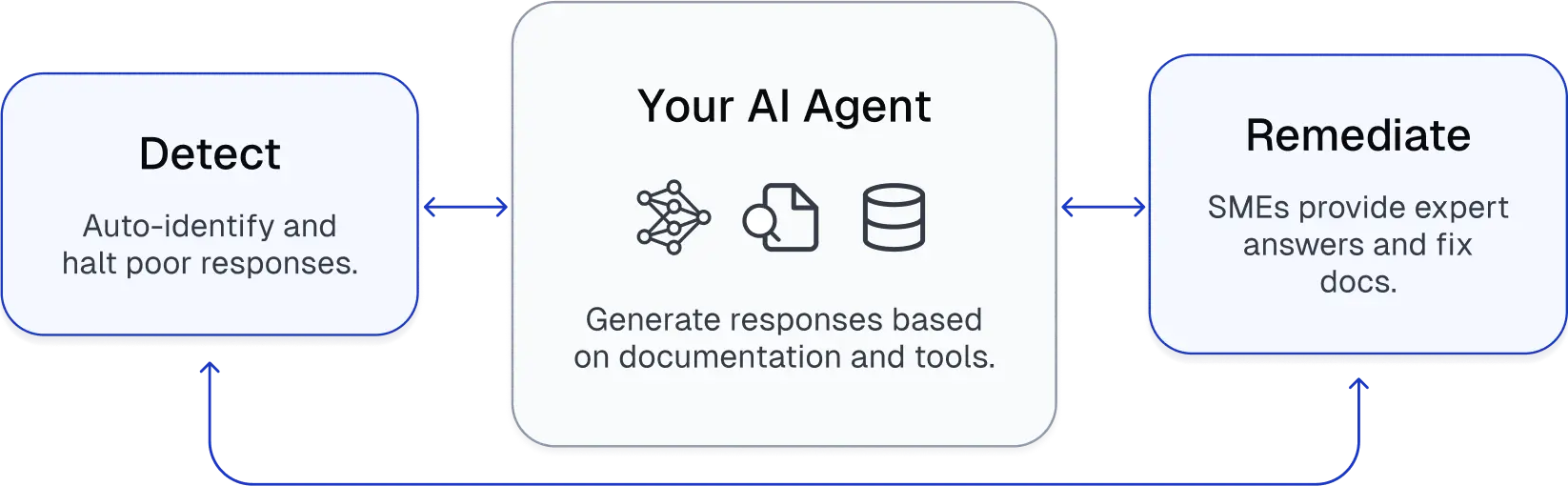

Cleanlab helps enterprises detect and remediate AI mistakes, knowledge base issues, and more. Source: cleanlab.com

Today, Cleanlab pitches itself as the “reliability layer” for enterprise AI, giving teams the tools to make agents production-ready with the right controls. Its blend of technology and practices helps organizations detect low-quality outputs, identify root causes, improve response quality, and apply the proper guardrails to ensure safe, accurate, and compliant performance at scale.

Cleanlab is certainly on a roll. Last year, Curtis led his team to a $25 million Series A from the prestigious Menlo Ventures and Bain Capital – demonstrating just how valuable his contributions have become to the future of AI. It also counts global brands like Amazon, Google, and Databricks as customers.

Agent alert: Risks ahead

While that might seem like a lot of preamble, it establishes Curtis as one of the leading minds shaping the realm of agentic AI. His credentials – and human focus – reinforce Cleanlab’s credibility in this burgeoning space.

Here’s why it matters: Over the last year, I’ve written extensively about the “agentic ambitions” of CMS, DXP, and enterprise customers, each showcasing the potential of their systems and agents. In the AI arms race, agents are at the front line, and a lot is riding on their success. That’s why we need smart people analyzing this space.

Case in point: Recent research from MIT has cast a shadow over the expectations of Gen AI projects, suggesting 95% of projects fail before reaching production. But for the 5% that break through, there are still mountains to climb and cliffs to fall from.

But that was just the beginning. Curtis wanted to go deeper, so Cleanlab partnered with a survey platform and Menlo to do some digging. The data produced was compelling: even among this small fraction of companies with AI systems in production, there are major challenges around infrastructure stability and reliability, and a widening gap between the AI adoption narrative and the reality enterprises face.

The research, titled "AI Agents in Production 2025: Enterprise Trends and Best Practices," surveyed 95 engineering and AI leaders from the estimated 5% of organizations that have moved AI agents beyond the pilot stage and into real production environments.

Respondents spanned industries and company sizes, and the survey ran from January to August of 2025. It focused on leaders already operating AI agents in production with live user interactions – so no pilots or proof-of-concept stages. Research included regulated sectors like financial services and unregulated categories like consumer markets.

According to Curtis, part of the challenge rests with a widening gap between what the industry calls “AI agents” and what agents need to do. Most aren’t executing the kind of heavy, hype-worthy use cases that AI vendors are promoting. How well agents handle longer, more complex tasks is a signal of their limitations, something I recently discussed with Treasure Data’s CEO Kaz Ohta.

“Real agents orchestrate work across multiple systems, pulling data from disparate sources,” Curtis said. “Most deployments aren't there yet, and that's a key reason governance hasn't become urgent for many organizations.”

Given the findings, Curtis feels that the enterprise agent market might be further off than the hype suggests, with timelines being inflated – and that truly reliable enterprise agents are more likely a 2027 story than a 2026 reality.

Let’s look at the metrics. There’s a lot to unpack, but here are some of the highlights.

Infrastructure is a failure point

First, infrastructure instability has become a sort of “new normal,” with 70% of regulated enterprises rebuilding their AI agent stack every three months – or even faster. The same is true on the unregulated side, with 41% of organizations reporting a similar rebuild frequency.

These numbers are shockingly disruptive, and there’s certainly an unexpected price tag associated with all this refactoring. This rapid churn also reflects how quickly models, frameworks, and infrastructure evolve, with systems becoming obsolete in a single quarter.

Reliability sucks

As you might surmise, this lack of reliability is a real vibe killer, with fewer than a third of teams reporting satisfaction with observability and guardrail solutions. In fact, half of production teams are actively evaluating alternatives to their current reliability tools.

I’ve been harping on observability in CMS and DXP throughout this agentic run-up, but Cleanlab’s data reflects that these vital layers of insight and evaluation are the lowest-rated components of the AI infrastructure stack. That’s a real problem with priorities.

Production systems just aren’t ready

One of the biggest challenges rests with the reality of production systems, which we’ve already codified as being early-stage. The lack of maturity, knowledge, and agent best practices is surfacing in risky ways – with only 5% of engineering leaders citing accurate tool calling as a top technical challenge.

As Curtis noted, tool calling in particular is one of the core tenets that defines an agent’s functionality, and this lack of prioritization is a telling signal. “If you’re not concerned about tool calling, you’re not building an agent,” he said. “You’re just taking an LLM and outputting some stuff.”
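To make “accurate tool calling” a little more concrete, here is a minimal sketch of one check an agent runtime might run before executing a call proposed by a model. Everything in it is hypothetical: the tool names, the registry shape, and the `validate_tool_call` helper are assumptions for illustration, not anything taken from Cleanlab's report or product.

```python
# Hypothetical tool registry: tool name -> required argument names and types.
TOOLS = {
    "lookup_order": {"order_id": str},
    "issue_refund": {"order_id": str, "amount": float},
}


def validate_tool_call(call):
    """Return a list of problems with a model-proposed tool call (empty if OK)."""
    name = call.get("name")
    if name not in TOOLS:
        return [f"unknown tool: {name!r}"]

    schema = TOOLS[name]
    args = call.get("arguments", {})
    problems = []

    # Every declared argument must be present and of the declared type.
    for arg, expected_type in schema.items():
        if arg not in args:
            problems.append(f"missing argument: {arg}")
        elif not isinstance(args[arg], expected_type):
            problems.append(
                f"wrong type for {arg}: expected {expected_type.__name__}"
            )

    # Arguments the tool never declared are flagged too.
    for arg in args:
        if arg not in schema:
            problems.append(f"unexpected argument: {arg}")

    return problems


good = {"name": "lookup_order", "arguments": {"order_id": "A1"}}
bad = {"name": "issue_refund", "arguments": {"order_id": "A1", "amount": "ten"}}
print(validate_tool_call(good))  # prints []
print(validate_tool_call(bad))   # prints ['wrong type for amount: expected float']
```

A check like this only catches malformed calls. Whether the agent picked the right tool for the task is a harder evaluation problem, and that is the part the survey suggests few teams are prioritizing.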

Additionally, document processing and customer support augmentation represent the dominant use cases, which are low-level tasks. This indicates most production AI is still focused on surface-level jobs rather than deep reasoning or sophisticated tool use.

Improving visibility is a critical goal

According to the research, visibility has become the top investment priority for organizations as they bolster their agentic strategies. Some 63% of enterprises plan to improve observability and evaluation capabilities in the next year, while 62% plan to add feedback loops and learning mechanisms to improve output performance.

On the governance side, there’s also momentum – although not nearly enough. Some 42% of regulated enterprises plan to add oversight features (things like approvals and review controls) compared to only 16% of unregulated companies.

Hybrid is the way

When it comes to deploying agentic systems, the research suggests that hybrid approaches are the preferred and dominant models. Few organizations rely entirely on vendors or build everything in-house; most combine internal infrastructure with external tools. Regulated enterprises, however, do show higher adoption of open source model fine-tuning.

The procurement ‘time bomb’ is ticking

When it comes to AI and agentic systems, one of the most important and least discussed barriers is enterprise procurement. As we’re acutely aware, AI is evolving on a weekly or even daily basis. But large enterprises historically procure software tools and platforms with annual terms in mind.

By the time a deal is finally ready, the tech – and sometimes even the problem framing itself – has fundamentally changed. Curtis sees this as a challenging hill to climb, particularly in regulated industries like finance.

“If we want to close a big bank, it’s going to be about six months to do the first pilot, then three months evaluating if they can build it internally,” he explained. “Several of our enterprise deals took two years, and now that seems insane. We don’t even live in the world we lived in two years ago. So the deal they were evaluating is no longer the deal we’re doing today.”

Curtis’s recommendation is blunt: Organizations need to change their internal operational structure to handle vendors in as little as two to four weeks. That might be a tall order, but as he suggests, waiting longer means risking significant change to the underlying fundamentals – and perhaps even missing opportunities to realize impact.

Enterprises that create friction around this mindset could also give up competitive ground. In his mind, it’s no longer an option to live inside long-term, rigid lifecycles for procurement. That might put a lot of pressure on large organizations, but the risks are significant.

“The people who are thinking, ‘It’s our culture to take several years’ are going to lose in the long run,” he said. “Every technology you have will be two years older than your competitors.”

Build, buy, or outsource? The AI strategy dilemma

From chatbots to search agents, the market is flush with myriad AI solutions. But the reality is that many of the visible and successful AI vendors are, in practice, focusing on services.

Compounding the lack of purpose-built technology is the pervasive morass of “AI washing,” where lackluster solutions have been marketed with inflated expectations. These challenges can create dangerous dependencies if you’re an enterprise, particularly as it relates to the value of deploying an agent system.

“You can’t pay a services company to take your critical path to revenue and give that away,” Curtis explained. “As soon as you do that, your engineering team can no longer build the core thing that is the function to revenue for your business.”

Curtis lays out a more sustainable enterprise playbook. First, hire AI engineers – aggressively. As he recommends, your budget should be a 50/50 mix of software and infrastructure engineers to address the core and underlying challenges. He also advocates for buying real products, not endless pilots that lead to long decision cycles and two years of engineering time.

“Don’t do 10 pilots that take two years and then buy one at the end,” he said, suggesting that buying three today might save you time and money in the long run.

Services still have a place, but they should be used sparingly and reserved for non-core functions. As Curtis explained, for anything on your critical path to revenue – like a brand-defining customer experience – you should own the AI capability and not outsource it entirely. He said working with third-party vendors will be part of every ecosystem, but you also have to be AI-native internally.

The evolution of jobs: Agents and the future of work

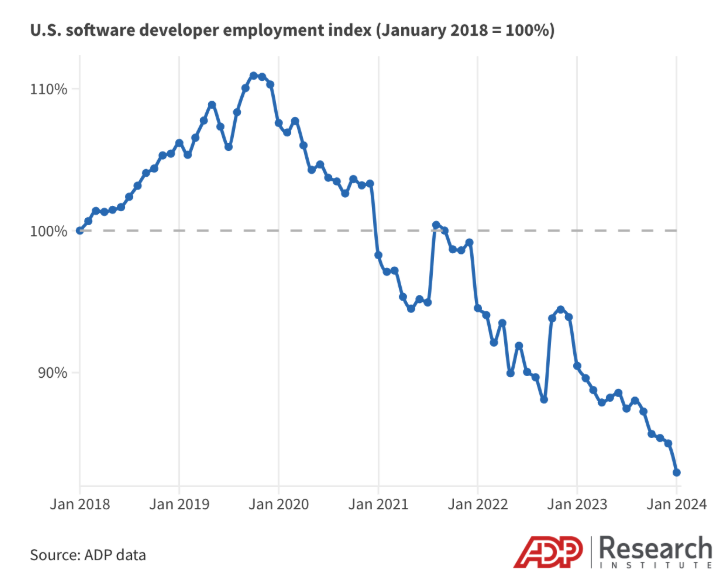

As a leader in the AI space, I asked Curtis about the stark realities of displacement, specifically for developers and engineers. As I’ve noted in my own research at several conferences, there’s been a precipitous decline in job creation for developers going back to 2019, when the market peaked. Some of the recent losses have been attributed to “rightsizing” and outsourcing in a post-pandemic world, but AI is also being blamed for the drop.

U.S. software developer employment index. Source: ADP Research

Over the same period, the exponential growth of GitHub repos focused on Python, PyTorch, OpenLLaMA, and other AI-centric frameworks presents clear evidence that AI has become the core of modern development. It is also a testament to how automation is changing the way developers work – from copilots to vibe coding tools.

Despite all the doom-and-gloom narratives, Curtis is adamant: AI is changing jobs, not eliminating them wholesale. During our conversation, he shared a use case where Cleanlab deployed AI into a large customer support org, and the human agents were empowered by the software – allowing them to transform their roles. Instead of answering every ticket manually, agents now manage and improve responses.

“Their job has become looking at what AI says and leveraging it to instruct and teach them to be better,” he said. “You end up becoming more of a PM or a manager of the AI responses.”

As Curtis indicated, headcounts may shrink over time through attrition, but the remaining roles will be more skilled and strategic. And those people will have a critical role to play as AI agents still require humans to define goals, set guardrails, and establish proper governance.

“It still takes a human to decide what’s the job for the robot,” he added. “It’s the same with the digital world. It’s about controlling, monitoring, and improving these digital robots called agents. And those are new jobs.”

What’s next for enterprises

Over the last few years, there have been plenty of “panic” moments in the generative AI sprint. From hallucinations to prompt injections, trust has been a hard-fought battle. But adoption is clearly growing, and there’s no better indicator than ChatGPT becoming the world’s most downloaded app in 2025.

Now, as agentic AI enters a phase of hypergrowth and the U.S. and China pour billions into innovation, the race is becoming tighter. With a sliver of enterprises running AI agents in production, we’re still early stage – but that’s when the cracks often appear.

This report was beyond enlightening. Like APIs, we don’t spend enough time talking about the security and stability issues that often exist in plain sight. Cleanlab’s research highlights the fundamental challenges facing the agent frontier and exposes risks that even the most successful AI workloads might be experiencing.

The survey’s responses pull back the digital curtain, revealing the gulf between simple metrics on AI agent deployment and the operational realities at play. Even in organizations that have moved AI agents into production, there are constant infrastructure rewrites, low satisfaction with available tools, and a focus on basic use cases. It all suggests that the tech stack is far from mature, and for current AI deployments, success is relative.

What can we do about it? Cleanlab offers some prescriptions:

- Enterprises need to be more proactive and plan for iterations, not just stability. This is where a modular approach to architectures can really deliver.

- As the report details, it’s important to invest early in reliability layers. One of the chief complaints among respondents was weak observability, which prevents scale regardless of the model or its quality. Many teams also aren’t prioritizing these layers at all, which is a huge problem.

- Software vendors – like CMS and DXP platforms – might be struggling with the challenge of building on unstable foundations. With stacks evolving faster than organizations can standardize, vendors need to be flexible, designing for modularity and rapid iteration over long-term lock-in.

- Market maturity is one of the major headwinds. As the research reflects, most agents are still early-stage. Vendors who can deliver systems capable of deeper reasoning, accurate tool use, and transparent operation under real-world conditions will differentiate their value.
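The modular approach in the first prescription can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a recommended architecture: `ModelBackend`, `EchoBackend`, `UppercaseBackend`, and `SupportAgent` are hypothetical names, and the two backends are trivial stand-ins for real vendor clients. The point is that the agent depends on a small interface, so a quarterly rebuild can mean swapping one component rather than rewriting the stack.

```python
from typing import Protocol


class ModelBackend(Protocol):
    """The only surface the agent depends on (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in for one vendor's model client."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseBackend:
    """Stand-in for a replacement vendor adopted a quarter later."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class SupportAgent:
    """Agent logic that never imports a vendor SDK directly."""

    def __init__(self, backend: ModelBackend) -> None:
        self.backend = backend

    def answer(self, question: str) -> str:
        return self.backend.complete(question)


agent = SupportAgent(EchoBackend())
print(agent.answer("where is my order?"))  # prints echo: where is my order?

# Swapping the backend leaves the agent code untouched.
agent.backend = UppercaseBackend()
print(agent.answer("where is my order?"))  # prints WHERE IS MY ORDER?
```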

Curtis was clear that human feedback is the key for enterprises. We’re fond of saying that we need a “human in the loop,” and in this case it’s true: technology alone can’t close the rift between experimentation and dependable operation.

“The strongest signal in our research is the ability to learn from human correction and oversight is what separates experimental AI from dependable AI,” he said. “This isn't about humans versus machines, it's about building systems where humans and AI make each other better.”

There are bright spots in all of this, the biggest being governance. This is something regulated industries are accustomed to, and as such, they’re embedding human oversight directly into their workflows. But that’s still a smaller portion of the market – so there’s still a lot of work to do.

Why it matters

Headlines can do their best to fuel AI pessimism, but there’s so much promise on the agentic horizon. Throughout the year, I’ve seen modest and even significant gains with AI from vendors across the digital experience space, and there’s a bigger note of optimism.

That said, success will require a closer examination of our agentic systems and how they’re future-proofed to scale. That means zeroing in on the critical infrastructure and reliability challenges that Curtis is evangelizing. Here are some key takeaways from our conversation – and Cleanlab’s research:

- Reset expectations: Agents in 2025 are largely experimental, and many aren’t ready for scale.

- Rethink procurement: Accelerate your decision-making to stay ahead.

- Invest in people: Rebuild org charts around AI engineering and AI-aware skills.

- Own your core: Don’t outsource AI where it directly touches revenue and brand.

One thing the MIT report revealed is how friction plays a critical role in achieving AI success. If enterprises follow the right path – and not the easy one – they can improve AI performance and define a roadmap for what needs to change, so agents can truly work at scale and meet real expectations.

Infrastructure is a huge component. As the report says, the challenge isn’t building an agent, it’s building on a surface that doesn't stop moving. Accommodating this motion requires smart people and AI savviness, something Curtis and Cleanlab are keenly focused on. And the timing is kismet as we look ahead to the uncertainty of a new year – flush with new opportunities and challenges.

“The trough of disillusionment is coming in 2026, and honestly, that's healthy,” he said. “It's when we stop believing the hype and start doing the hard work.”

The message is clear: Don’t give in to the “agentic panic.” The organizations that use this period wisely will be positioned to lead when the technology catches up to the ambition.

For the complete research, download the full report, "AI Agents in Production 2025: Enterprise Trends and Best Practices."
