Backoff on the AI Layoffs: Domo’s Chris Willis on the Future of Work in 2026

Despite headlines that companies are replacing workers with AI, many have been quietly rehiring the same roles. As Domo’s Chief Design Officer and futurist Chris Willis explains, leaders are moving too fast on the promise of AI. Here’s what he sees coming – and what it reveals about the future of our work.

I remember taking my son to see the movie WALL-E in an air-conditioned theater during the summer of 2008. He was still in “tot” territory, barely four, and an unstable entity after 20 minutes of sitting anywhere.

But hey, this was Pixar – the animation studio with an uncanny trance-like power over young attention spans. Old ones, too. Have you seen UP? Grab the Kleenex. It’s a doozy.

I’m happy to report that he made it through WALL-E, completely unaware of the dystopian overtones that enveloped the narrative. If you’re not familiar with the plot, let’s just say it’s about a couple of robots on a scorched Earth with way more humanity than the people they’re trying to save.

On the surface, it was whimsical fare. There was almost no dialogue, and the characters were charming and lighthearted. Perfect for kids. But for the adults in tow, it was an unabashed critique of our rampant consumerism and the vicissitudes of an ecological nightmare run amok.

What fascinated me then – and more now – was the implication that, at some point, humans would collapse into a state of apathy, victims of our own insatiable appetites. Almost 20 years after its release, it feels more relevant than ever as automation threatens every dimension of our existence, from driverless cars to delivery drones to humanoid robots performing manual tasks.

Did we receive the signals? Heed the lessons? Methinks not.

When I was at Sitecore Symposium this past November, I saw the latest predictions from the Marketing AI Institute’s Paul Roetzer, who sees 2027 as the “year of the robot” (not to be confused with the “year of the rabbit”). This prediction is owed in part to OpenAI’s Sam Altman, who sees a gentle singularity underway, one where robots will be handling real-world tasks that will scale exponentially.

The question, of course, is simple: if robots are handling all of our jobs, what the hell is left for us to do?

We don’t need to wait until 2027 to absorb the potential impact of this seismic shift. The displacement is upon us. As generative AI spews forth an entire workforce of agents – digital workers that are ambient, autonomous, and never interested in a coffee or a smoke break – companies have seized the opportunity to replace certain roles with AI functions.

It’s not hyperbole. CEOs boldly claimed that their workforces would shrink. Economists warned of the disruption, and employees felt grim about the future.

In the vulnerable tech sector – where leaders like Satya Nadella have claimed AI could eventually replace millions of jobs – Amazon, Microsoft, Salesforce, and IBM actually trimmed their headcount. Big time. According to consulting firm Challenger, Gray & Christmas, almost 55,000 job cuts in 2025 were directly attributed to AI.

On a global scale, the World Economic Forum estimated that 85 million jobs would be displaced by the end of 2025, with administrative and customer service roles at the greatest risk.

It's 2026. Did the mass wipeout ever happen?

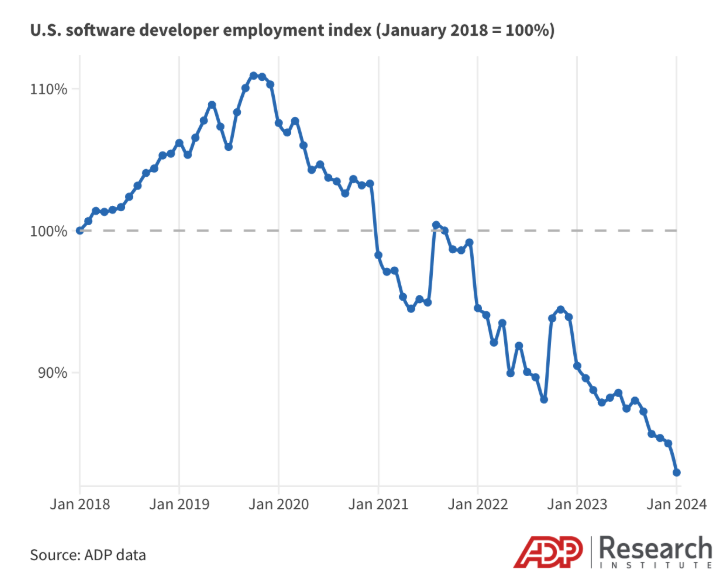

Not exactly. The picture can be a bit misleading. Case in point: I've spent the last two years monitoring the decline in software development jobs, which began a precipitous slide way back in 2019.

Despite the irrational exuberance of COVID-infused digital transformation, the numbers show a stark reality: developer roles are disappearing, and the recent plunge has been directly attributed to the rise of copilots and GenAI assistants. Even in late 2024, Google CEO Sundar Pichai said that over 25% of Google’s code was already being written by AI – with more automation on the way.

Source: ADP Research

Over the same period, I cross-referenced this trend with data from GitHub, which revealed an explosion of new repos focused on Python, PyTorch, Ollama, and other frameworks associated with AI development. So while new job creation was nosediving, developers were innovating and upleveling.

Perhaps the prognostications of doom are better framed as a reset than a replacement. Workers are getting smarter across AI competencies. Employment in AI-exposed jobs actually rose 1.7% between mid-2023 and mid-2025, with AI fluency and literacy skills being rewarded with increased wages.

As for the companies that eliminated human workers and assigned an email address to an AI agent? They’ve been quietly rehiring some of the people they let go.

What does this all mean for 2026 and the future of our work? As professionals in the content management and digital experience field, we’ve been the proverbial canaries in the AI coalmine. Generative AI has been passed through our tools like a gastrointestinal flu, but we’re finally seeing high utility hope in the agentic era. Along with that, the associated jobs are evolving.

As a point of comparison, I talked to many practitioners last January about the rampant fear consuming the creators and developers in our field, but the outlook has been decidedly more positive this year. That’s a refreshing way to start 2026 – even if it’s still flush with uncertainty.

In this brave new world of AI, understanding its evolutionary impact on our work is vital. To gather some industry-leading reflection on the topic, I recently spoke to Chris Willis, Domo’s Chief Design Officer and resident futurist. And on this topic, who better to talk to than a futurist?

Domo’s Chief Design Officer and Futurist, Chris Willis. Source: LinkedIn

As one of the early foundational thinkers behind Domo – which, in many ways, is like a CMS for data – Chris has always thought about the relationship between people and machines. He doesn’t see a world where WALL-E is taking over our day-to-day human operations, but he does see the opportunity for tomorrow’s workers to be augmented by AI in positive ways.

As Chris told me, AI didn’t eliminate work. In fact, it revealed how much intuition, oversight, and decision-making were actually required to make AI safe and effective. It might not be a Pixar-worthy plot, but it says a lot about our most important job in this changing world: tapping our humanity.

‘The Great AI Layoff’

Over the last two years, AI has become an investment darling and strategy panacea for our collective growth woes. Add the acronym to a pitch deck, and you could prune money from trees. Toss it in a press release, and editors were certain to pick it up.

2026 is already feeling markedly different. The street has poured a lot of cash into AI ventures, yet according to a widely cited MIT study, only 5% of projects manage to reach production – and who knows how few have ultimately translated into success. It's still too early to measure the full impact.

Despite the hard realities of ROI and illusory expectations, business leaders leaned hard into automation last year to justify job cuts, betting that generative models and copilots could take over large chunks of knowledge work.

In line with the market data I’ve already detailed, Chris thinks the layoff bet is backfiring. Aside from investors looking for higher gains from RIW (reduction in workforce), the optics ultimately suck for brands that openly boast about AI displacement. Heck, McDonald’s had to pull an AI-generated ad because consumers weren’t exactly lovin’ it. The AI backlash is real.

But as he told me, the core problem isn’t the technology, it’s the way leaders are using it to reshape their org charts. In his mind, they simply moved too fast and eliminated critical roles – not because AI isn’t capable of delivering a modicum of value, but because they misunderstood what it’s good at. And, more importantly, where people hold the real value.

“A lot of companies kind of rushed to automate and then realized that the automation aspects, just bringing AI tools into the business, was really the easy part,” he said. “The hard part is judgment, and that's an essential human skill, and I don't see models as possessing that.”

At least not yet. Reasoning is sort of a “final frontier” in the model landscape, and last year saw some serious friction. In June, at WWDC, Apple put the brakes on its large reasoning model (LRM) initiative, citing systemic collapse when trying to solve really complex problems. In essence, they didn’t yield any remarkable benefits over LLMs that operate downstream.

But resting our hopes on what AI might be capable of isn’t the right prescription, and businesses are waking up to the realities of what’s possible now and how it can be harnessed. This relationship between people and machines is unlocking what Chris sees as the real value in a human/AI partnership – one where people are in control. This is where businesses need to optimize the equation.

“Instead of doing more work, we're going to be steering more work,” he said. “And I think that's the essential idea that a lot of companies are starting to realize. That it’s really about a human‑AI collaboration, and that has to be intentional and built-in.”

The myth of ‘AI Efficiency’ and why downsizing is a downer

The first wave of AI-driven layoffs has largely been framed as an efficiency play – and a bold experiment in AI’s potential. The benefits were intoxicating: if a model can write marketing copy, summarize reports, or generate code, why pay people to do these jobs?

Chris argues this is fundamentally misaligned – and it doesn’t fully address the hard stuff that businesses wrestle with. Generating artifacts is easy. Getting to reliable, outcome‑driven decisions? Not so much. And AI’s output isn’t evenly extensible across every dimension of business operations.

“Leaders kind of simply extrapolated [AI] to the rest of their business,” he said. “‘Well, if it helped me write this email, why can't it help me with strategy? Or why can't it just write a new content marketing plan?’ Well, because the models don't truly understand outcomes. They don't have that kind of judgment.”

And they don’t care about those outcomes. They’re biased. Inherently optimistic about everything. And completely lacking in genuine empathy. That’s risky given how much reliance we’re developing on these tools.

As Chris told me, we’re now seeing AI make it easier for people to weave outside their lane, treating it as a replacement for expertise and intelligence. In this way, we’re letting it accelerate our work without having to critically think or validate the output.

“A lot of the tools being built are backwards,” he said. “It's like they're trying to provide a cure for thinking.”

With this human brain drain, there’s real risk at play, particularly in the realm of content. If organizations cut too deeply into roles that anchor strategy, quality, and risk management, they end up with an explosion of AI‑generated output – and fewer people with the time, mandate, or expertise to verify whether any of it is directionally accurate. This is where AI downsizing can begin to show up as:

- Rework and hidden tech/content debt

- Brand, legal, and security risks

- Slower decisions, not faster ones

The result, Chris suggests, is an “AI hangover” of sorts: lots of AI tools and technologies, fewer people running them, and less leverage over the outcomes.

“Some companies are saying, ‘Okay, now what?’” he said. “That's where the work is. And I don't think people realize how bringing in human judgment, creating new kinds of tools that actually help us steer AI – that’s the hard part.”

The roles companies realize they still need

As organizations run up against the limits of a “do more with less” AI mindset, that quiet rehiring pattern has picked up steam. That said, the roles that are returning have evolved, often imbued with more AI-forward skills and expectations. Improved AI literacy is also helping people guide, constrain, and connect AI to real business outcomes.

Chris doesn’t describe this as rehiring in so many words, but he’s clear about the kinds of capabilities that turn AI from a novelty into an asset – things like architecture, orchestration, guardrails, and governance. These map directly to the kinds of roles that have proven too costly to lose.

“The best engineers are the ones who can understand, architect, and work with AI in a very, I would say, intimate level, to begin to understand where that jagged frontier is – where it does really well here and then fails with a slightly adjacent problem.”

One of the consequences of the speed of AI adoption has been mounting technical debt, something we need qualified people to help remediate. As Chris said, we’re generating greater potential risk, opening ourselves up to exploitable gaps and attack vectors.

“Anyone who's done real development knows that the difference between a proof of concept and a production‑ready thing is sometimes a very long, arduous process,” he said. And it’s true: a layperson can vibe code a prototype into existence, but they still need qualified engineers to take it across the finish line as a performant solution.

Translating that into hiring signals, the roles that are coming back include:

- AI‑literate architects and senior engineers who can separate proof‑of‑concept from production reality

- Content and data stewards who understand provenance, chain of custody, and trust in an AI‑remixed world

- Product‑minded practitioners (in marketing, ops, analytics) who can define outcomes, specify constraints, and evaluate AI behavior over time

To be fair, these aren’t necessarily the same job descriptions that were cut over the last two years. They’re more hybrid, cross‑functional, and often focused on orchestration over execution. But they are, in many cases, being filled by the same kinds of people who were shown the door when “automation” became the mantra.

“Organizations up to this point have been designed as functional hierarchies,” Chris said. “Those functional hierarchies are designed to coordinate tasks, to orchestrate tasks. Right now, I think our big challenge is orchestrating intelligence and collaboration around intelligence – that's a different model.”

Workers in 2026: From task doers to work directors

So what does all this mean for workers in 2026?

For Chris, the shift looks structural: work is moving, as he said, from doing to steering. From task execution to outcome orchestration.

Perhaps the greatest near-term risk is that workers can get caught in the middle of this melee. Jobs might be replaced by tools that do more of the heavy lifting, but workers might miss the essential re-skilling into the judgment roles that actually matter.

“The question is, can you do this while up-leveling skills rather than de‑skilling people?” Chris asked. “That's the rocket ship versus the couch model. Like, ‘Hey, I put in something and it gives me an answer, but I didn't get any smarter.’”

This certainly resonates in the CMS world, where content editors and practitioners are grappling with the evolving definition of content operations in a new AI-powered paradigm, one where content is transforming across a multitude of channels. In this new “content supply chain” ecosystem, the job roles are being rapidly rewritten with little training or defined expectations.

On the developer side of the equation, it’s a move away from line‑by‑line coding toward architecting systems that combine probabilistic and deterministic components, with an eye on failure modes and observability.

The workers who thrive in this environment will treat AI as a collaborator, not a replacement – or a crutch. By learning to specify problems clearly, define guardrails, and interpret outputs, they can stay ahead of change in a more meaningful way.

But the responsibility doesn’t sit with individuals alone. Chris was blunt in his assessment that most organizations aren’t set up to support this kind of shift – yet.

“Companies thought, let's bring in all these AI tools, and then we're going to give them to people, and we're either going to automate away a bunch of work, or our people are going to become innovators overnight,” he said. “We entered the ‘Year of Magical Thinking.’”

In 2026, workers are living with the consequences of that magical thinking: whiplash surrounding job stability and expectations, uneven AI adoption, and emerging demand for skills that didn’t really exist just a few quarters ago.

Success rests with preparedness, and organizations that invest in literacy surrounding things like data quality, provenance, and evaluation – and not just prompt tricks – will have a better shot at facing uncertainty in the AI landscape.

Building a new ‘skill tree’ with strong roots

I’ve bought and sold businesses over the course of my career and navigated the rugged terrain of multiple recessions. During these periods, leaders are often faced with the conundrum of letting go or retaining talent, with the expectation that things will inevitably turn around. Of course, AI has changed the calculus.

Underneath the hiring and firing cycles is something deeper: most companies simply don’t have the organizational “skill tree” required to use AI responsibly and effectively.

Chris laid out the roots of that skill tree as four big branches – conditioning, authority, automation, and compounding gains. Together, they balance a new equation that organizations are going to have to wrestle with as they approach a hybrid environment for people and AI.

“They’re going to have to build a new skill tree,” he said. And for hiring, that implies a very different way of thinking about roles:

- Conditioning: People who can translate domain expertise into context, constraints, and evaluation signals for AI systems

- Authority: People who own the integrity of content and data across its full lifecycle, in a world of endless remixing

- Automation: People who design and maintain workflows that blend models, rules, and humans in the loop

- Compounding gains: People who can turn one‑off experiments into durable capabilities, with governance and measurement baked in

Organizations that cut these roles in the pursuit of efficiency end up paying twice: once in disruption, and again when they discover they still need this work done – only now with greater urgency and less internal memory.

Can leaders avoid making the same mistake twice?

If the first wave of AI layoffs was driven by magical thinking and a shallow view of efficiency, what does a better second wave look like?

As Chris shared with me, it starts with humility. The fact is, we’re all swimming in AI anxiety. Even tech luminary Andrej Karpathy – co-founder of OpenAI and former director of AI at Tesla – recently admitted on X: “I’ve never felt this much behind as a programmer.”

Source: X

“If the guy who designed and built these things feels behind, I think the anxiety that we feel is real and warranted,” Chris said. “But the next thing is, what tools do you have to navigate that? I think we're at a moment where we have to think about these things in a very intentional way.”

For leaders, avoiding a second round of self-inflicted damage means:

- Stop treating AI as a headcount substitution model. Treat it as a shift in how work is organized – away from functional hierarchies that coordinate tasks and toward systems that orchestrate intelligence.

- Identify judgment centers, not just cost centers. Before you cut, map where critical decisions are made, where risk is managed, and where meaning is attached to data and content. Those are the last roles you can afford to lose.

- Invest in “steering roles.” Create titles, growth paths, and opportunities for people who own conditioning, authority, automation, and compounding gains. Don’t expect this work to happen on the side.

- Measure de‑skilling as a real risk. Watch for any AI rollout that makes it easier for people to push buttons but harder for them to understand what’s happening.

Chris’s optimism is conditional: AI can absolutely be a force multiplier for both organizations and individuals, but only if leaders resist the urge to automate away the very capabilities that make their companies resilient.

Humans matter – more than ever

During the final credits of WALL-E, we see a subtle epilogue play out. Responding to the signal that Earth is once again fertile, humans return from space to farm the land – picking up shovels and “retraining” around these basic forgotten skills. Robots work alongside them, helping to clean up our messes, but not wholly replacing the labor.

AI isn’t going away. In fact, OpenAI, Oracle, and SoftBank expanded the forthcoming Stargate data center project to a $500 billion, 10-gigawatt commitment at the end of 2025. With it comes the promise of AI-centric jobs and opportunities, but there’s still a great deal of uncertainty ahead.

In 2026, the companies that win won’t be the ones that reduce headcount. They’ll be the ones that steer AI with human power – preserving and elevating roles with the right skills to meet the future head-on.

“We want to get better at thinking,” Chris said. “We want to get better at judgment. We want to get better at informing the intuitions that we always had. And that's my hope.”
