Why AI-Powered Development Tools Fail After Launch

And It’s Not a Code Problem.

Geetha Rajan is a strategy executive advising Fortune 500 companies on AI adoption, AI transformation, and organizational change, and a CMS Critic contributor.

MIT’s NANDA Initiative dropped a number last year that made a lot of people uncomfortable: 95% of generative AI pilots deliver zero measurable impact on the P&L. Not “underperform.” Zero. The finding draws on 150 executive interviews and an analysis of 300 public deployments, and the picture is clear: billions spent with little measurable impact.

Then Cleanlab’s survey of nearly 1,900 tech executives and engineers added another layer: only 5% had AI agents in production, and 60–70% were changing their entire AI stack every three months. Not iterating. Replacing.

If you’re building on platforms like Vercel or shipping with AI-assisted tools like Cursor and GitHub Copilot, these numbers should stop you cold. The instinct in the development ecosystem is to treat this as a technical problem—bad models, immature tooling, infrastructure that can’t keep up. Those things are real.

But they’re not what’s killing your projects.

The Misdiagnosis

Here’s what the MIT data shows when you look past the headline. The 5% that succeeded weren’t using better models. They were operating within organizations that had solved a different set of problems—problems unrelated to code.

Successful deployments shared three traits: they empowered line managers to drive adoption, integrated tools into existing workflows rather than requiring wholesale changes, and built distinct enablement strategies for different parts of the organization. They treated AI adoption as an organizational problem, not a technical one.

This is the core premise behind Pilots to Scale™: scaling AI is not about model performance, but about aligning people, workflows, and metrics so experimentation becomes sustained execution.

This distinction matters in software development. Consider Vercel’s v0, now used by over 3.5 million people. The tool is impressive—describe what you want, and the agent plans, builds, and deploys. But even Vercel has acknowledged the emerging challenge: vibe coding is quickly becoming “the world’s largest shadow IT problem.” Employees ship security flaws alongside features, credentials get copied into prompts, and company data goes public with no audit trail.

That’s not a Vercel problem. That’s what happens when powerful tools get adopted faster than organizations can absorb them.

Where Engineering Teams Actually Get Stuck

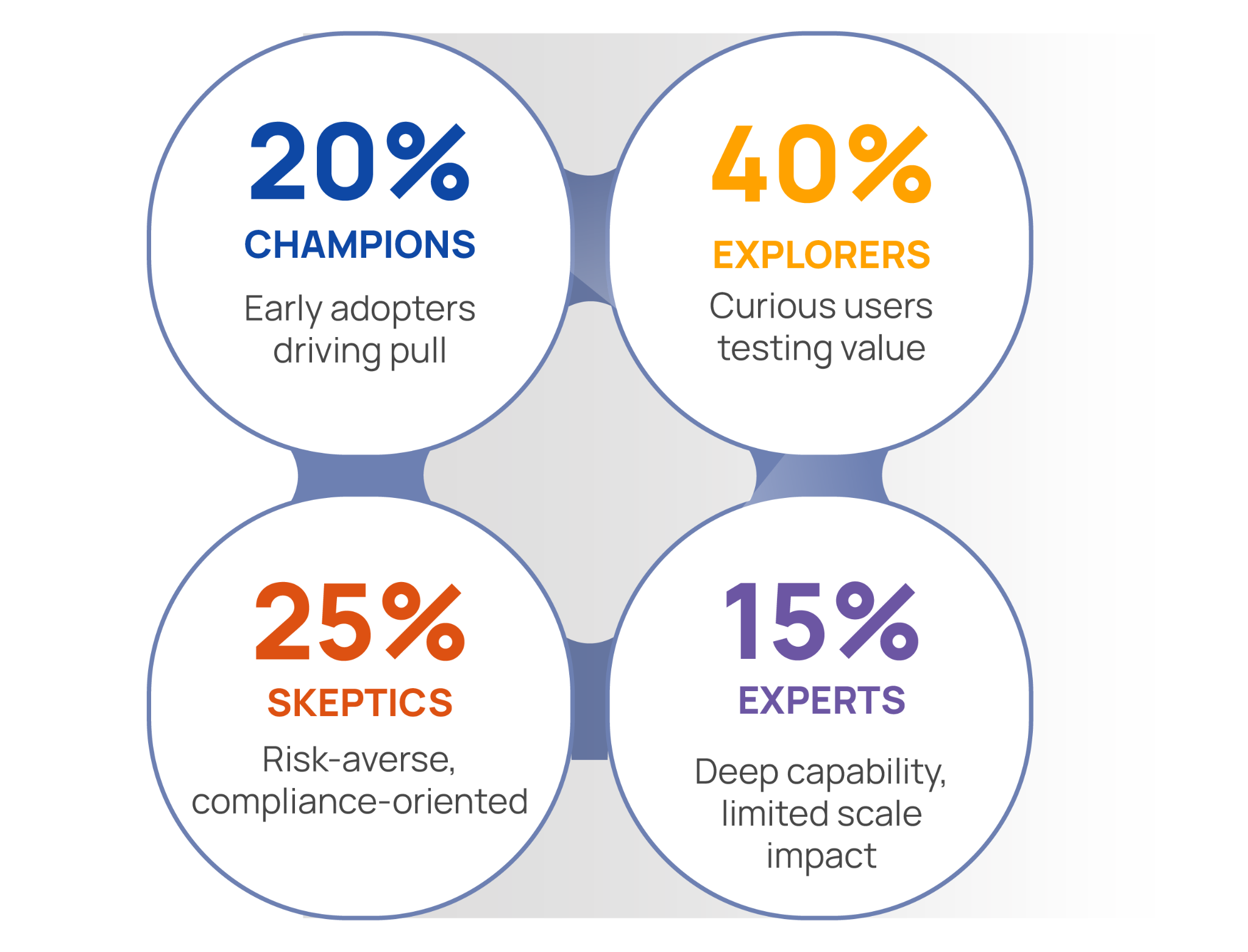

Across hundreds of organizations in the Pilots to Scale™ research, a consistent pattern emerges: the failure point lies between the tool and the team. Organizations fail to recognize that their workforce doesn’t adopt new technology as a monolith. In any organization, engineering teams included, you find roughly four segments with fundamentally different relationships to AI tools.

- Champions (about 20%) are already experimenting. They’ve been using Copilot since day one.

- Explorers (about 40%) are curious but stuck. They’ve seen the demos but don’t know how to go from “that’s cool” to “this is how I work now.”

- Skeptics (about 25%) aren’t irrational. They’ve watched previous tooling waves come and go, and they’re waiting for proof.

- Experts (about 15%) have deep technical fluency but may focus on custom builds when off-the-shelf tools would scale faster.

Recognizing and enabling these segments differently is one of the central design principles of Pilots to Scale™. It’s also where most enterprise rollouts quietly fail.

Most engineering leaders treat all four groups the same. Universal rollouts. One training program. A single Slack channel. Then they wonder why adoption stalls at around 20%, roughly the size of the Champions group that was going to adopt anyway.

The 40% in the middle—the Explorers—is where scale comes from. Reaching them requires structured experimentation with guardrails, protected time to learn, and clear definitions of success beyond “ship faster.”

Exhibit 1: The four workforce segments that determine AI adoption outcomes. Most organizations treat their teams as a single population. The Pilots to Scale™ research identifies four distinct cohorts—each requiring a different enablement strategy. The 40% in the middle is where scale breaks through or stalls.

Source: Independent research by Geetha Rajan, including quantitative survey data (n=100) and in-depth interviews (n=200) with operational leaders responsible for AI implementation.

What the 5% Do Differently

The organizations that successfully scale AI build three capabilities that have nothing to do with model selection or infrastructure.

First, they translate AI capabilities into actual workflow changes. They don’t bolt Copilot onto existing processes and hope for improvement. They redesign how work gets done—code review, testing, deployment—around what AI makes possible. Most teams skip this entirely.

Second, they mandate cross-functional coordination. AI-powered development touches security, compliance, product, and design. Successful deployments had cross-functional governance in place from day one, rather than bolting it on after a security incident. As Cleanlab’s CEO, Curtis Northcutt, put it: the moment you think you’ve figured it out is the moment you fall behind. That’s an organizational insight, not a technical one.

Third, they invest in segment-specific enablement. Champions become peer coaches. Explorers get structured 30-day experiments with weekly check-ins. Skeptics are paired with champions who demonstrate concrete value. One enterprise software company I studied segmented its team this way and saw adoption jump from 12% to 73% in one quarter. Same tools. Same org. Different strategy.

The Agentic Refactoring Loop

Cleanlab’s data about constant stack refactoring is real—and it creates a vicious loop. Teams build an agent; it breaks on messy enterprise data. They refactor. A better LLM drops. They rebuild. Workflows shift. They refactor again. Between 60% and 70% of executives surveyed were swapping out their entire AI stack every three months. That’s not iteration. That’s a treadmill.

The organizations that break out of this loop aren’t the ones with more stable technology. They’re the ones that built organizational infrastructure to absorb churn—teams that evaluate, adopt, and sunset tools in structured ways. When your people trust that AI adoption is being managed, not just mandated, they refactor faster and flag problems earlier. The constraint isn’t the technology’s speed. It’s the organization’s ability to metabolize it.

The Bottom Line

The tools are here. Vercel’s v0, Cursor, Copilot, Windsurf—capable and improving rapidly. The 95% failure rate isn’t a verdict on the technology. It’s a verdict on how organizations adopt it.

If you’re a development leader wondering why your team’s AI adoption looks great in demos and stalls in production, stop looking at the stack. Start looking at your people—who they are, what they need, and whether you’re building the organizational muscle to let them actually use these tools. The code problem is largely solved. The organizational problem is just getting started.
